Introduction

As artificial intelligence evolves at an astonishing pace, traditional computing architectures are beginning to show their limitations. The explosive growth in data, the demand for real-time decision making, and the need for energy-efficient hardware have pushed researchers to rethink how computers should operate. Enter neuromorphic computing – a revolutionary approach inspired directly by the structure and function of the human brain. While still emerging, neuromorphic systems promise to redefine how machines learn, adapt, and interact with the world.

Definition

Neuromorphic computing is an approach to designing computer systems that mimic the structure and function of the human brain, using networks of artificial neurons and synapses to process information in a highly parallel, energy-efficient manner. It aims to replicate brain-like features such as event-driven computation, adaptability, and low power consumption, enabling faster and more efficient processing for tasks like pattern recognition, sensory processing, and autonomous decision-making.

What Is Neuromorphic Computing?

Neuromorphic computing refers to hardware and software systems designed to mimic the neural architecture of the biological brain. Instead of the conventional von Neumann architecture – where memory and processing are physically separated – neuromorphic systems integrate memory and computation in a highly parallel, distributed network, much as neurons and synapses do in the human brain.

Traditional computers excel at arithmetic, precision tasks, and sequential operations. But they struggle with problems the brain solves effortlessly: pattern recognition, contextual understanding, fluid adaptation, and learning from sparse data. Neuromorphic computing aims to bridge this gap by creating hardware that behaves more like a brain.

At the core of neuromorphic systems are spiking neural networks (SNNs). Unlike conventional artificial neural networks, which pass continuous-valued activations between layers on every step, SNNs communicate with discrete “spikes” that a neuron emits only when its internal state crosses a threshold. This event-driven signaling can substantially reduce power consumption and enables real-time responsiveness.
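To make the idea of a spike concrete, here is a minimal sketch of a leaky integrate-and-fire (LIF) neuron, one of the most common neuron models used in SNN research. The parameter names and default values (`tau`, `threshold`, `v_reset`, `dt`) are illustrative assumptions, not the settings of any particular chip.

```python
def simulate_lif(input_current, tau=10.0, threshold=1.0, v_reset=0.0, dt=1.0):
    """Simulate one leaky integrate-and-fire neuron (illustrative parameters).

    input_current: sequence of input values, one per time step.
    Returns the list of time steps at which the neuron spiked.
    """
    v = v_reset  # membrane potential
    spikes = []
    for t, i_in in enumerate(input_current):
        # Forward-Euler step: the potential leaks toward zero while
        # integrating the incoming current.
        v += dt * (-v / tau + i_in)
        if v >= threshold:
            spikes.append(t)  # emit a discrete spike...
            v = v_reset       # ...and reset the potential
    return spikes
```

For example, `simulate_lif([0.3] * 12)` returns `[3, 7, 11]`: the neuron charges up, fires, resets, and repeats. A weak input such as `[0.05] * 200` never reaches the threshold, so it produces no spikes at all, and in an event-driven system no downstream work.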

Why the Brain Is the Ultimate Blueprint

The human brain is often described as the most advanced computing system known. It contains roughly 86 billion neurons, each connected to thousands of others, yet it operates on approximately 20 watts of power – less than many lightbulbs.

Its secret lies in parallelism and locality:

- Parallel processing allows millions of neurons to fire concurrently.

- Local memory storage (synapses) eliminates the bottleneck of shuttling data back and forth between separate memory and processing units.

Neuromorphic computing takes these principles and designs circuits that emulate them. This bio-inspired architecture yields several standout advantages:

1. Ultra-Low Power Consumption

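Since SNN neurons activate only when events occur, neuromorphic chips can remain largely idle until an input triggers them. This differs fundamentally from CPUs and GPUs running conventional neural networks, which compute over every input on every step whether or not anything has changed, and which draw power even when there is little useful work to do.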
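The energy argument comes down to how much work is done per time step. The sketch below counts synaptic operations for a single fully connected layer under two simplifying assumptions: a clock-driven dense layer touches every weight on every step, while an event-driven layer only processes the weights fanning out from inputs that actually spiked. Operation counts are a rough proxy for energy, not a measurement of any real chip, and the layer sizes and 2% spike rate are hypothetical.

```python
import random

def dense_ops(n_in, n_out, steps):
    """Synaptic operations for a clock-driven dense layer."""
    # Every input is multiplied by every weight on every time step,
    # regardless of whether the input changed.
    return n_in * n_out * steps

def event_driven_ops(spike_trains, n_out):
    """Synaptic operations for an event-driven layer."""
    # Work happens only when an input neuron spikes; each spike is
    # delivered to all n_out downstream neurons.
    total_spikes = sum(sum(train) for train in spike_trains)
    return total_spikes * n_out

def random_spike_trains(n_in, steps, rate, seed=0):
    """Hypothetical sparse input: each neuron spikes with probability `rate` per step."""
    rng = random.Random(seed)
    return [[1 if rng.random() < rate else 0 for _ in range(steps)]
            for _ in range(n_in)]

if __name__ == "__main__":
    n_in, n_out, steps = 1000, 100, 50
    trains = random_spike_trains(n_in, steps, rate=0.02)
    print("dense ops:       ", dense_ops(n_in, n_out, steps))
    print("event-driven ops:", event_driven_ops(trains, n_out))
```

With about 2% of inputs active per step, the event-driven count comes out at roughly 2% of the dense count. The gap shrinks as activity rises, which is why sparse, event-like workloads are where neuromorphic hardware is expected to shine.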

2. High Speed Through Parallelism

Neuromorphic systems process information in parallel. This allows them to handle real-time sensory data – vision, sound, touch – much more efficiently than sequential architectures.

3. On-Chip Learning

Whereas traditional machine learning models are typically trained offline on massive datasets, neuromorphic chips can learn on the fly. Some architectures support on-chip synaptic plasticity, meaning synapses adjust their weights in real time based on experience.
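One widely studied plasticity rule is spike-timing-dependent plasticity (STDP). Under this rule, a synapse is strengthened when the presynaptic spike shortly precedes the postsynaptic spike and weakened when the order is reversed. The sketch below implements a simple pair-based exponential STDP update. The constants (`a_plus`, `a_minus`, `tau_plus`, `tau_minus`) and weight bounds are illustrative assumptions; hardware platforms typically implement their own configurable variants.

```python
import math

def stdp_delta(t_pre, t_post, a_plus=0.01, a_minus=0.012,
               tau_plus=20.0, tau_minus=20.0):
    """Weight change for one pre/post spike pair (times in ms, illustrative constants)."""
    dt = t_post - t_pre
    if dt > 0:
        # Pre fired before post (causal): potentiate, less so for larger gaps.
        return a_plus * math.exp(-dt / tau_plus)
    if dt < 0:
        # Post fired before pre (anti-causal): depress.
        return -a_minus * math.exp(dt / tau_minus)
    return 0.0

def update_weight(w, pre_spikes, post_spikes, w_min=0.0, w_max=1.0):
    """Apply all pairwise STDP contributions, then clip to [w_min, w_max]."""
    for t_pre in pre_spikes:
        for t_post in post_spikes:
            w += stdp_delta(t_pre, t_post)
    return min(max(w, w_min), w_max)
```

For instance, `update_weight(0.5, [10], [15])` nudges the weight above 0.5 because the input "caused" the output, while `update_weight(0.5, [15], [10])` pushes it below 0.5. Because the update uses only locally available spike times, rules of this kind are attractive for on-chip learning.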

4. Robustness and Fault Tolerance

Biological brains remain functional despite noise, missing data, or even neuron loss. Because information in neuromorphic systems is likewise distributed across many neurons and synapses, they can inherit some of this resilience.

Key Players and Breakthroughs

Several major research institutions and companies are developing neuromorphic hardware:

IBM TrueNorth:

One of the earliest large-scale neuromorphic chips, TrueNorth contains 1 million neurons and 256 million synapses. It demonstrated real-time pattern recognition with unprecedented energy efficiency.

Intel Loihi:

Intel’s Loihi series is among the most advanced neuromorphic research hardware available today. Loihi supports on-chip learning, spike-driven computation, and specialized plasticity rules. Its second-generation chip, Loihi 2, further improves scalability and neuron-model flexibility.

BrainScaleS (Human Brain Project):

Unlike the digital chips above, BrainScaleS uses mixed-signal analog circuits to emulate neuron behavior at accelerated speeds, up to 10,000 times faster than biological real time. At that factor, a full day of biological activity can be emulated in under nine seconds.

SpiNNaker:

Developed by the University of Manchester, SpiNNaker uses about a million ARM processor cores to simulate large-scale spiking neural networks in real time.

These platforms serve as testbeds for research in AI, neuroscience, robotics, and edge computing.

Neuromorphic Computing vs. Traditional AI

Today’s AI is powered mostly by deep learning running on GPUs or TPUs. While powerful, these systems face growing issues:

- High energy consumption

- Dependence on cloud infrastructure

- Long training cycles

- Lack of adaptability

- Limited biological realism

Neuromorphic computing addresses these challenges by offering:

- Faster adaptation

- Event-driven processing

- Efficient on-device learning

- Scalability inspired by neural biology

- Potential for real-time autonomous behavior

While traditional deep learning will continue to dominate most applications for now, neuromorphic computing is carving a niche where brain-like abilities are essential.

Real-World Applications on the Horizon

Although neuromorphic technology is still maturing, several promising use cases are emerging.

1. Edge AI and IoT Devices

Smart sensors, wearables, and autonomous systems require local intelligence without draining power. Neuromorphic chips can process sensory data (images, audio, vibrations) directly on the device.

2. Robotics

Robots need fast reflexes, perception, and continuous learning. Neuromorphic processors could enable truly adaptive robots capable of operating safely in dynamic real-world environments.

3. Brain–Machine Interfaces

Because neuromorphic hardware communicates with spikes, much as biological neurons do, it is well suited to reading, interpreting, and simulating neural activity.

4. Neuromorphic Vision Systems

Event-based cameras report per-pixel brightness changes instead of full frames. Paired with SNNs, they enable ultra-fast, low-power image recognition, making them an excellent fit for drones, self-driving cars, and industrial automation.
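An event camera does not send full frames. Instead, each pixel independently reports an event when its log intensity changes by more than a contrast threshold. The sketch below emulates that behavior from two ordinary grayscale frames, a common way to approximate event data in simulation. The threshold value and the `(x, y, polarity)` tuple format are assumptions for illustration, not the output format of any particular sensor.

```python
import math

def frames_to_events(prev, curr, threshold=0.2, eps=1e-3):
    """Emit (x, y, polarity) events where log intensity changed enough.

    prev, curr: 2-D lists of non-negative pixel intensities, same shape.
    polarity is +1 for brightening and -1 for darkening.
    """
    events = []
    for y, (row_prev, row_curr) in enumerate(zip(prev, curr)):
        for x, (p, c) in enumerate(zip(row_prev, row_curr)):
            # Event pixels respond to relative (logarithmic) change;
            # eps avoids log(0) for completely dark pixels.
            diff = math.log(c + eps) - math.log(p + eps)
            if diff >= threshold:
                events.append((x, y, +1))
            elif diff <= -threshold:
                events.append((x, y, -1))
    return events
```

For a 2×2 image where one pixel doubles in brightness and another halves, only those two pixels produce events: `frames_to_events([[100, 100], [100, 100]], [[100, 200], [50, 100]])` gives `[(1, 0, 1), (0, 1, -1)]`. A static scene yields an empty list, so an SNN consuming these events has nothing to compute.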

5. Scientific Modeling

Researchers can use neuromorphic platforms to simulate brain circuits and explore neurological diseases, cognition, and learning mechanisms.

6. Security and Anomaly Detection

Because neuromorphic systems are well suited to pattern recognition and continuous adaptation, they are promising candidates for detecting unusual behavior in cybersecurity and sensor networks.

Challenges Ahead

Despite its promise, neuromorphic computing faces several hurdles:

- Lack of standardized programming frameworks: Most neuromorphic chips require their own programming models and tools.

- Difficulty integrating with existing AI workflows: Deep learning dominates the field, and SNNs are not yet drop-in replacements for conventional neural networks.

- Hardware constraints and scalability issues: Fabricating large-scale, reliable neuromorphic systems is a major engineering challenge.

- Limited public datasets and benchmarks for SNNs: The ecosystem is still young compared to traditional AI.

However, as research accelerates and more companies invest in neuromorphic designs, these barriers are gradually diminishing.

The Future of Neuromorphic Computing

Neuromorphic computing is not just a new type of chip – it represents a foundational shift in how we think about computation itself. As energy demands escalate and AI becomes more deeply embedded in our lives, architectures that emulate the brain’s efficiency will become increasingly essential.

Over the next decade, we can expect:

- Hybrid systems combining neuromorphic and conventional processors

- Growth of neuromorphic cloud platforms for research

- Expansion into consumer electronics

- New learning algorithms optimized for SNNs

- Deeper integration with robotics and autonomy

While neuromorphic computing won’t replace traditional AI entirely, it will complement it – providing the adaptability, efficiency, and intelligence needed for the next generation of smart machines.

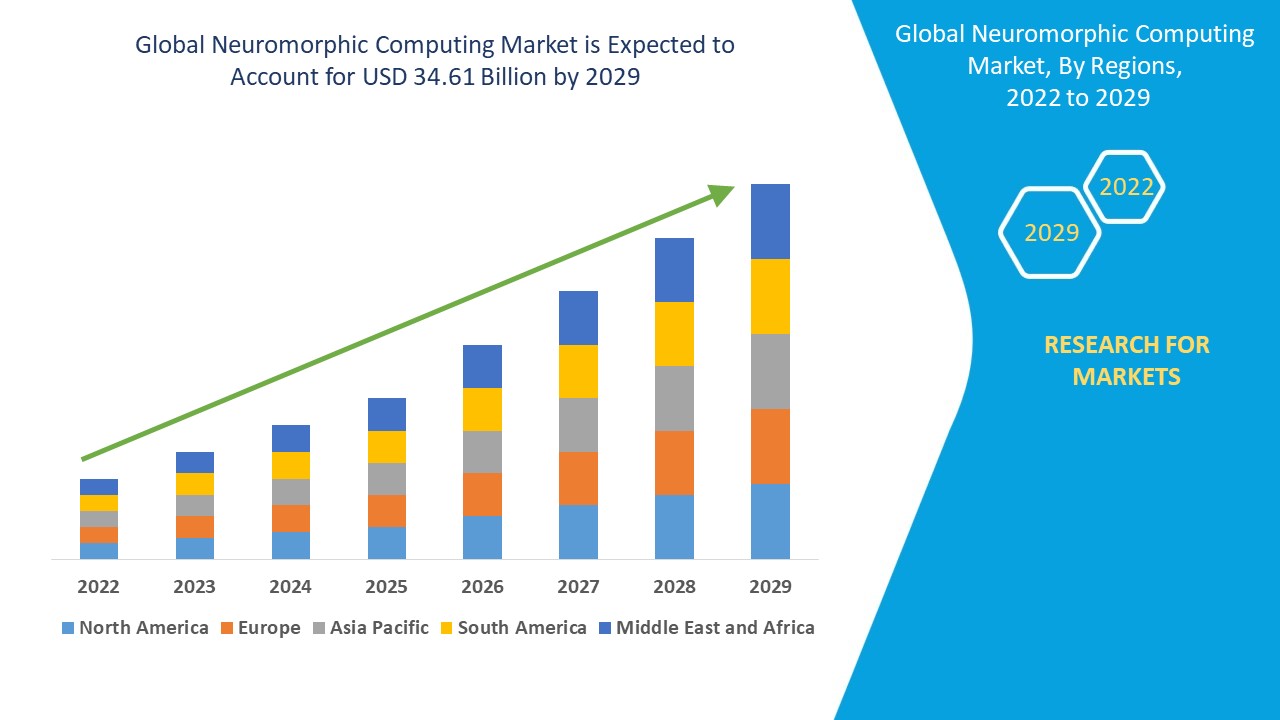

Growth Rate of Neuromorphic Computing Market

According to Data Bridge Market Research, the neuromorphic computing market was estimated to be worth USD 28.30 billion in 2024 and is expected to grow at a compound annual growth rate (CAGR) of 34.20% from 2025 to 2032, reaching USD 297.72 billion by 2032.

Learn More: https://www.databridgemarketresearch.com/reports/global-neuromorphic-computing-market
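These figures are internally consistent under the standard compound-growth formula, value_end = value_start × (1 + CAGR)^years, assuming 2024 is the base year and eight years of growth run through 2032. A quick check:

```python
def project(value_start, cagr, years):
    """Compound growth: apply the annual rate `years` times."""
    return value_start * (1 + cagr) ** years

# USD billions; base year 2024, eight growth years (2025 through 2032).
projected_2032 = project(28.30, 0.342, 8)
print(f"{projected_2032:.1f}")  # prints 297.7
```

The result matches the reported USD 297.72 billion to within rounding, which confirms the CAGR is applied over eight years starting from the 2024 estimate.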

Conclusion

Neuromorphic computing represents a transformative step toward building machines that learn and adapt with the efficiency of the human brain. By mimicking neural architecture, it offers significant advantages in speed, energy efficiency, and real-time learning, capabilities that traditional computing struggles to achieve. As research advances and applications expand, neuromorphic systems are poised to play a pivotal role in the future of AI, powering smarter, more intuitive, and more energy-efficient technologies across industries.